Understanding Container Orchestration: The Role of Kubernetes and Containers

Container orchestration plays a critical role in managing containerized applications, enabling efficient deployment, scaling, and monitoring of containers across various environments. Kubernetes and containers are two essential components in this context. Containers provide a lightweight, portable, and consistent runtime environment for applications, while Kubernetes serves as a powerful platform for container orchestration, automating the deployment, scaling, and management of containerized applications.

Containers: A Brief Overview

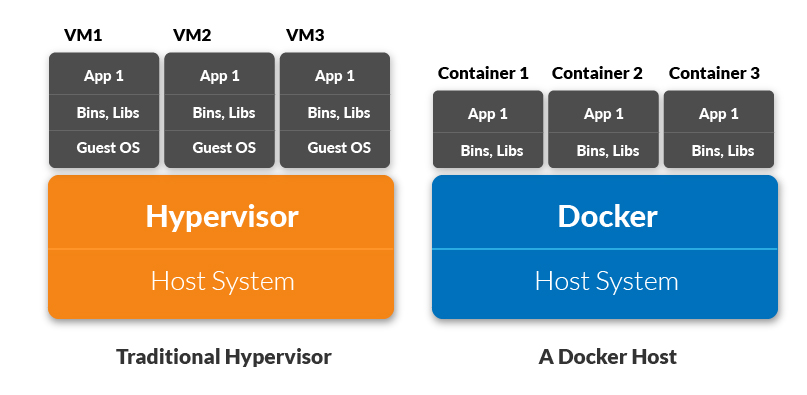

A container is a lightweight, standalone, executable software package that includes everything needed to run an application: code, libraries, system tools, and settings. Containers simplify application deployment and scaling by providing a consistent, portable runtime environment across platforms and infrastructure. Popular container runtimes include Docker, containerd, and CRI-O, with Docker being the most widely recognized.

Containers offer several benefits, including:

- Isolation: Containers run applications in an isolated environment, preventing conflicts and ensuring consistent behavior across various systems.

- Portability: Containers are highly portable, allowing applications to run seamlessly on different platforms, from local development environments to production servers.

- Scalability: Containers simplify application scaling by enabling the deployment of multiple instances of an application with minimal overhead and resource utilization.

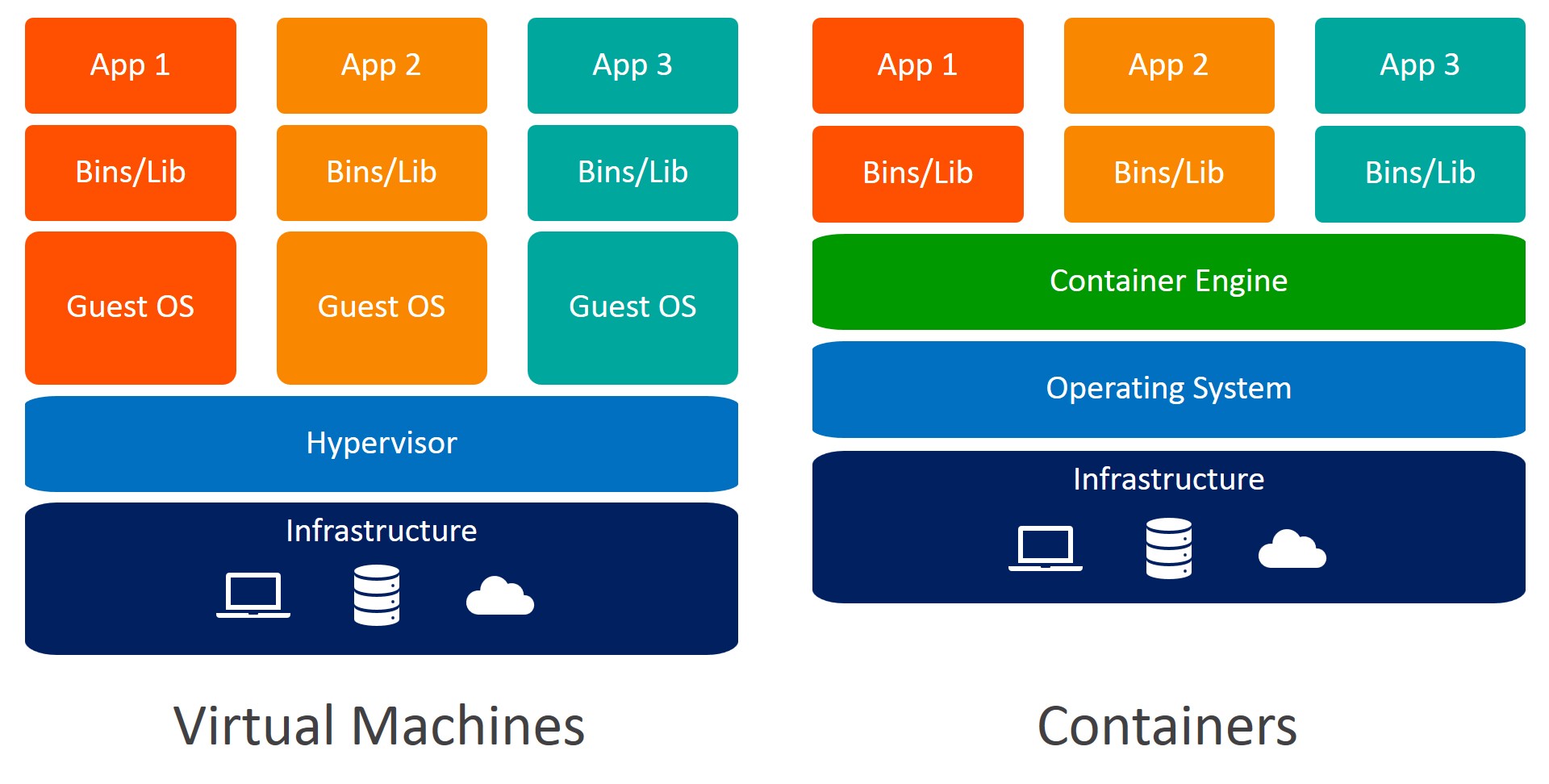

- Efficiency: Containers are lightweight and resource-efficient. Because they share the host operating system's kernel instead of booting a full guest OS, they consume fewer resources than traditional virtual machines (VMs).
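To make the isolation and scalability points above concrete, here is a minimal sketch that builds the command lines for two independent instances of the same image. It assumes the Docker CLI is the runtime in use. The image tag `nginx:1.27`, the container names, the ports, and the resource limits are illustrative choices, not values from this article.

```python
import shlex


def docker_run_command(image, name, host_port, container_port,
                       memory="256m", cpus="0.5"):
    """Build the argument list for a detached `docker run` invocation."""
    return [
        "docker", "run",
        "--detach",                                    # run in the background
        "--rm",                                        # remove the container when it exits
        "--name", name,                                # hypothetical container name
        "--publish", f"{host_port}:{container_port}",  # map host port to container port
        "--memory", memory,                            # cap memory for this container
        "--cpus", cpus,                                # cap CPU time for this container
        image,
    ]


# Two isolated instances of the same image, each on its own host port.
commands = [
    docker_run_command("nginx:1.27", f"web-{i}", 8080 + i, 80)
    for i in range(2)
]

for argv in commands:
    print(shlex.join(argv))
```

The script only prints the commands. Passing each list to `subprocess.run` would start the containers on a machine where Docker is installed.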

Kubernetes: A Powerful Container Orchestrator

Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It provides a robust and flexible solution for managing containerized workloads at scale, simplifying the complexities of container management and enabling organizations to focus on delivering value to their customers.

Kubernetes offers several features and benefits, including:

- Automated deployment: Kubernetes automates the deployment of containerized applications, ensuring consistent and reliable deployment across various environments.

- Self-healing: Kubernetes continuously monitors the health of containerized applications, automatically restarts failed containers, and reschedules pods from unresponsive nodes onto healthy ones.

- Load balancing: Kubernetes intelligently distributes network traffic across multiple container instances, ensuring optimal performance and resource utilization.

- Horizontal scaling: Kubernetes simplifies the process of scaling containerized applications horizontally, enabling organizations to quickly and easily add or remove container instances based on demand.

- Service discovery: Kubernetes automatically discovers and configures services within a cluster, simplifying the process of managing complex, distributed applications.

- Secret and configuration management: Kubernetes provides built-in support for managing secrets and configuration data, ensuring secure and consistent access to sensitive information across the entire application lifecycle.

By understanding the role of Kubernetes and its capabilities, organizations can make informed decisions about when and how to leverage Kubernetes for container orchestration, ultimately leading to more efficient, scalable, and resilient containerized applications.
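Several of the features listed above come together in a single Deployment object. The sketch below builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The names `web` and `web-secrets`, the key `api-token`, the image tag, and the probe settings are assumptions made for illustration.

```python
import json

# A Deployment asking for three replicas of one container image.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,  # horizontal scaling: desired number of pods
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [{
                    "name": "web",
                    "image": "nginx:1.27",
                    "ports": [{"containerPort": 80}],
                    # Self-healing: the kubelet restarts the container
                    # when this HTTP check keeps failing.
                    "livenessProbe": {
                        "httpGet": {"path": "/", "port": 80},
                        "periodSeconds": 10,
                    },
                    # Secret management: inject a value from a Secret
                    # (hypothetical name and key) as an environment variable.
                    "env": [{
                        "name": "API_TOKEN",
                        "valueFrom": {
                            "secretKeyRef": {
                                "name": "web-secrets",
                                "key": "api-token",
                            }
                        },
                    }],
                }],
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```

The pod template's labels must match `spec.selector.matchLabels`. Otherwise the API server rejects the Deployment.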

Kubernetes Architecture: Nodes, Pods, and Services

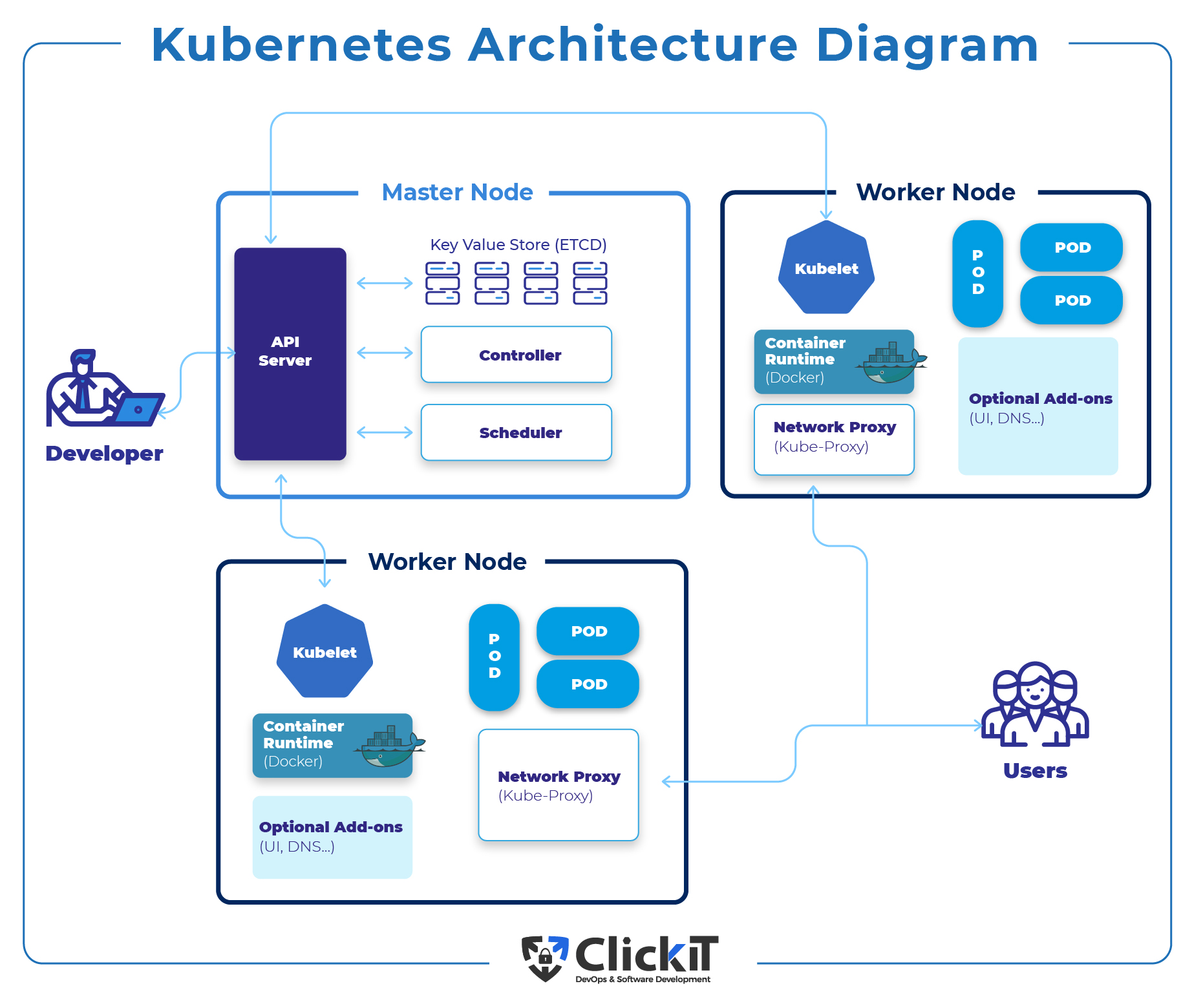

The fundamental building blocks of Kubernetes architecture include nodes, pods, and services. These components work together to manage containerized applications and provide a robust and flexible container orchestration solution.

Nodes

A node, also known as a worker node, is a physical or virtual machine that hosts and runs containerized applications. Each node is managed by the control plane (historically called the master node) and contains the components needed to run pods: a container runtime (e.g., containerd or CRI-O), the kubelet (an agent that communicates with the control plane), and kube-proxy (a network proxy that maintains the network rules routing Service traffic to pods).

Pods

A pod is the smallest deployable unit in Kubernetes and represents a single instance of a running process. A pod contains one or more containers that share the same network namespace, so containers within the same pod can communicate directly over localhost. Those containers can also share storage volumes, and the pod is scheduled, managed, and scaled as a single unit. By abstracting the underlying infrastructure, pods simplify the management of containerized applications and enable efficient resource utilization.
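As an illustration of containers sharing storage inside one pod, the following sketch defines a pod with two containers that mount the same `emptyDir` volume. The pod name, the container names, the `busybox:1.36` image, and the shell commands are hypothetical. Only the manifest fields themselves are standard Kubernetes API.

```python
import json

# Both containers mount the same volume at the same path.
shared_mount = {"name": "shared-data", "mountPath": "/data"}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "writer-reader", "labels": {"app": "demo"}},
    "spec": {
        # emptyDir lives as long as the pod and is visible to all its containers.
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "writer",
                "image": "busybox:1.36",
                "command": ["sh", "-c",
                            "while true; do date >> /data/log.txt; sleep 5; done"],
                "volumeMounts": [shared_mount],
            },
            {
                "name": "reader",
                "image": "busybox:1.36",
                "command": ["sh", "-c",
                            "touch /data/log.txt; tail -f /data/log.txt"],
                "volumeMounts": [shared_mount],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

The writer appends a timestamp every five seconds and the reader follows the same file, which works only because both containers belong to the same pod.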

Services

A service is an abstract representation of a set of pods that work together to provide a specific functionality or service. Services enable communication between pods, regardless of their location within the cluster, and provide a stable IP address and DNS name for accessing the underlying pods. Services can also be used to load balance network traffic, enabling efficient resource utilization and ensuring high availability for containerized applications.
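The way a service finds its pods can be sketched in a few lines. A service carries a label selector, and every pod whose labels contain all of the selector's key/value pairs becomes a backend. The code below simulates that matching locally and derives the in-cluster DNS name, assuming the default cluster domain `cluster.local`. The pod list and all names are made up for the example.

```python
def matches(selector, labels):
    """True when every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_dns_name(name, namespace="default", cluster_domain="cluster.local"):
    """DNS name under which a Service is reachable inside the cluster."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "selector": {"app": "web"},
        "ports": [{"port": 80, "targetPort": 80}],
    },
}

# Hypothetical pods and their labels.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web"}},
    {"name": "db-1", "labels": {"app": "db"}},
]

backends = [p["name"] for p in pods
            if matches(service["spec"]["selector"], p["labels"])]

print(backends)                  # ['web-1', 'web-2']
print(service_dns_name("web"))   # web.default.svc.cluster.local
```

Because clients address the service name rather than individual pods, pods can be replaced or rescheduled without clients noticing.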

By understanding the roles and interactions of nodes, pods, and services, organizations can effectively manage and orchestrate containerized applications using Kubernetes, ensuring efficient resource utilization, high availability, and resilience.

Key Differences: Kubernetes vs Containers

While Kubernetes and containers serve different purposes in the context of container orchestration, understanding their roles, use cases, and benefits is essential for effective container management. By recognizing the differences between Kubernetes and containers, organizations can make informed decisions about when and how to leverage each technology for optimal results.

Role and Use Cases

Containers provide a lightweight, portable, and consistent runtime environment for applications, simplifying deployment and scaling. Kubernetes, on the other hand, is a container orchestration platform designed to automate the deployment, scaling, and management of containerized applications at scale.

Running containers on their own, without an orchestrator, is ideal for simple applications, development environments, and learning purposes, while Kubernetes is best suited for managing large-scale, complex, and distributed applications. Kubernetes enables efficient resource utilization, high availability, and resilience, making it an ideal choice for organizations seeking to manage and scale containerized applications in production environments.

Benefits

Containers offer isolation, portability, scalability, and efficiency: applications run in isolated environments, behave the same from local development to production, scale by starting more instances, and consume fewer resources than traditional virtual machines (VMs).

Kubernetes adds orchestration-level benefits on top of these: automated deployment, self-healing, load balancing, horizontal scaling, service discovery, and secret and configuration management.

By understanding the key differences between Kubernetes and containers, organizations can effectively leverage each technology for their unique needs, ensuring efficient, scalable, and resilient containerized applications.

When to Use Containers Without Kubernetes

While Kubernetes offers numerous benefits for managing containerized applications at scale, there are scenarios where using containers without Kubernetes makes sense. In these situations, containers can provide a lightweight, portable, and consistent runtime environment for applications, simplifying deployment and scaling without the need for a full-fledged container orchestration platform.

Simple Applications

For simple applications with limited functionality, using containers without Kubernetes can be a more straightforward and efficient approach. In these cases, containers can be managed manually or with simple scripts, reducing the complexity and overhead associated with Kubernetes.

Development Environments

Containers can also be useful in development environments, where rapid iteration and experimentation are common. By using containers without Kubernetes, developers can quickly spin up and tear down application instances, test changes in isolated environments, and collaborate more effectively with their teams.

Learning Purposes

For those new to containerization and container orchestration, using containers without Kubernetes can be an excellent way to learn the fundamentals of container technology. By working with containers directly, users can gain a deeper understanding of containerization concepts and benefits before diving into the complexities of Kubernetes.

While using containers without Kubernetes can be beneficial in certain scenarios, it is essential to recognize the limitations of this approach. As applications grow in complexity and scale, managing containers manually or with simple scripts can become increasingly challenging and time-consuming. In these situations, adopting a container orchestration platform like Kubernetes can help organizations streamline container management, ensure high availability, and enable efficient resource utilization.
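To see why manual management stops scaling, consider what even a simple script has to do: compare the desired state with what is actually running and work out the difference. The sketch below is a hypothetical planner, not a Kubernetes component. The function name, the data shapes, and the container names are all assumptions.

```python
def plan_actions(desired, running):
    """Return the start/stop actions needed to reach the desired replica counts.

    desired: mapping of app name -> wanted number of containers
    running: mapping of app name -> list of running container names
    """
    actions = []
    for app, want in sorted(desired.items()):
        have = sorted(running.get(app, []))
        if len(have) < want:
            # Too few instances: start the missing ones.
            actions.extend(("start", app) for _ in range(want - len(have)))
        elif len(have) > want:
            # Too many instances: stop the surplus.
            actions.extend(("stop", name) for name in have[want:])
    # Anything running that is no longer desired is stopped as well.
    for app in sorted(set(running) - set(desired)):
        actions.extend(("stop", name) for name in sorted(running[app]))
    return actions


desired = {"web": 3, "worker": 1}
running = {"web": ["web-a"], "worker": ["worker-a", "worker-b"], "old": ["old-a"]}

actions = plan_actions(desired, running)
for action in actions:
    print(action)
```

A real setup would also have to run this comparison continuously, across several machines, and handle crashes in between. An orchestrator automates exactly that kind of reconciliation work.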

When to Adopt Kubernetes for Container Orchestration

Kubernetes is an ideal choice for container orchestration in situations where managing large-scale, complex, and distributed applications is essential. Its powerful features, benefits, and capabilities enable organizations to streamline container management, ensure high availability, and enable efficient resource utilization. By understanding when to adopt Kubernetes for container orchestration, organizations can make informed decisions about their container management strategies and ensure long-term success.

Managing Large-Scale Applications

As applications grow in size and complexity, managing containerized applications manually or with simple scripts can become increasingly challenging and time-consuming. Kubernetes simplifies the management of large-scale applications by automating deployment, scaling, and management tasks, ensuring consistent and reliable performance across various environments.

Complex Application Architectures

For applications with complex architectures, Kubernetes offers a robust and flexible solution for managing containerized workloads. By abstracting the underlying infrastructure, Kubernetes enables organizations to focus on delivering value to their customers, rather than managing the intricacies of container deployment and scaling.

Distributed Applications

Kubernetes is designed to manage distributed applications, enabling efficient resource utilization, high availability, and resilience. By automatically discovering and configuring services within a cluster, Kubernetes simplifies the process of managing complex, distributed applications, ensuring optimal performance and resource utilization.

Microservices Architectures

For organizations adopting microservices architectures, Kubernetes offers a powerful platform for managing and scaling individual microservices. By automating deployment, scaling, and management tasks, Kubernetes enables organizations to focus on developing and delivering high-quality microservices, rather than managing the underlying infrastructure.

To get started with Kubernetes and containers, consider the following steps:

- Learn the fundamentals of containerization and container orchestration with online courses, tutorials, and documentation.

- Experiment with containers and Kubernetes in a local development environment, using tools like Minikube or Docker Desktop.

- Deploy a simple application to a Kubernetes cluster, using a managed Kubernetes service like Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), or Azure Kubernetes Service (AKS).

- Follow best practices for successful container orchestration, including monitoring, logging, and security.

How to Get Started with Kubernetes and Containers

Getting started with Kubernetes and containers can be an exciting journey, offering numerous benefits for managing containerized applications at scale. To ensure a successful start, consider the following steps and resources for learning, tools for deployment, and best practices for container orchestration.

Learning Resources

To learn the fundamentals of containerization and container orchestration, consider the following resources:

- Docker Documentation: Comprehensive documentation on containerization, including getting started guides, best practices, and reference materials.

- Kubernetes Documentation: Official Kubernetes documentation, covering installation, deployment, configuration, and best practices.

- Udemy Courses: Online courses on Kubernetes and containerization, offering hands-on learning experiences and certificates of completion.

Tools for Deployment

For deploying and managing Kubernetes clusters and containerized applications, consider the following tools:

- Minikube: A local Kubernetes cluster for development and testing, supporting multiple platforms and container runtimes.

- Kops: A production-grade Kubernetes installation tool, enabling the deployment of clusters on cloud providers such as AWS and Google Cloud.

- Rancher: A comprehensive container management platform, offering Kubernetes distribution management, cluster deployment, and multi-cluster management.

Best Practices for Container Orchestration

To ensure successful container orchestration, consider the following best practices:

- Monitor and log containerized applications and Kubernetes clusters, using tools like Prometheus, Grafana, and Elasticsearch.

- Implement security best practices, including network policies, secrets management, and role-based access control (RBAC).

- Optimize resource utilization, using features like Kubernetes resource quotas, horizontal pod autoscaling, and vertical pod autoscaling.

- Plan for disaster recovery and high availability, using features like Kubernetes node affinity, pod disruption budgets, and multi-region deployments.
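The horizontal pod autoscaling mentioned above rests on a simple ratio, documented by Kubernetes as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that formula with a 10% tolerance band and minimum/maximum bounds. It is a simplified model under those assumptions: the real controller also accounts for pod readiness, missing metrics, and stabilization windows. The function and parameter names are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified horizontal autoscaling decision based on one metric."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Average CPU utilization in percent against a 60% target.
print(desired_replicas(4, 90, 60))   # 6: load is 1.5x the target, scale out
print(desired_replicas(4, 30, 60))   # 2: load is half the target, scale in
print(desired_replicas(4, 63, 60))   # 4: within tolerance, no change
print(desired_replicas(8, 120, 60))  # 10: capped at max_replicas
```

Sensible resource requests on each container matter here, because CPU utilization targets are computed relative to the requested amount.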

By following these steps and best practices, organizations can get started with Kubernetes and containers, ensuring successful container orchestration and long-term success.